The right form factor for AI coding

From what I can see, we currently have two main ways for software engineers to use AI for coding:

AI-assisted coding

You steer the development process as you would without AI, opting in to various forms of AI-driven completion to finish faster.

Typical form factor: An IDE with AI sprinkled in, because you need familiar IDE functionality as you drive the session.

Examples: Copilot, Gemini Code Assist, most of Cursor

Agentic coding

You explain what you want to accomplish, and the agent explores your codebase until it finds a solution, making many of its own choices along the way.

Typical form factor: A bare-bones terminal app. You don’t need a file browser because you’re not the one browsing files.

Examples: Claude Code, Cursor’s agent mode

(I am choosing to fold “vibe coding” into “agentic coding” here. I’m uncertain that there’s any difference between how the two terms are used today, except for VPs of Marketing telling their CEOs that enterprises aren’t going to pay for a product with the word “vibe” in its description.)

After using Claude Code for a few months, I’m firmly in the camp that believes agentic coding is the way forward. Let me try to explain why.

What decisions should the AI own?

Last year, I signed up for GitHub Copilot and then dropped it after a few months. Its autocomplete was occasionally amazing, but overall it didn’t feel like enough of a gain to be worth disrupting my workflow.

This year, I dove into Claude Code and was hooked within days. The learning curve was steep, but the improvements in development speed, design quality, and my mental energy were easily worth it.

When you let the agent do its own thing, you’re giving it more control over the design. What are the responsibilities of this new class? What do we name this method? Is it better to slightly modify some pre-existing code, or write something new even if it starts out looking similar?

And in my experience, Claude Code is as good at design as it is at implementation. That is, it’s right about 75% of the time, and it’s fast enough that my work improves overall, even when budgeting time for correction. (And I’m assuming many of the other agentic tools are roughly the same.)

Obviously you still need to stay involved somehow. Anthropic’s “explore, plan, code, commit” recommendation is roughly how I do it. The “plan” phase turns out to be especially useful: you can find and correct design mistakes quickly, before a lot of time has gone into writing code.

Also, is it possible that letting the AI own the whole thing, from design to implementation, leads to better results? After all, anyone who insists there’s an unambiguous boundary between design and implementation is probably selling you something.

AI-assisted coding is short-term skeuomorphism

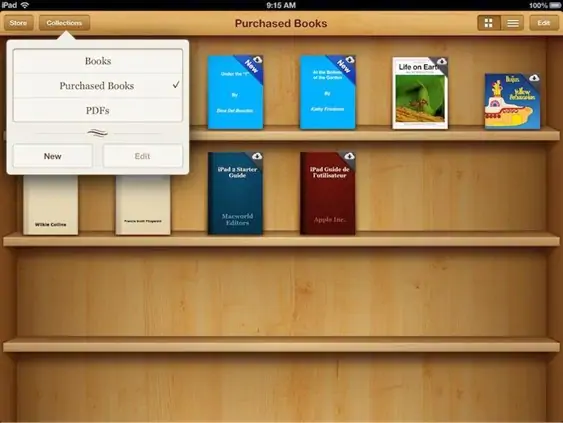

I keep coming back to the idea of skeuomorphism, a design concept where your new design retains cues of an older solution. Software used to be full of these designs, as we moved many physical interfaces into bits.

Eventually, a lot of that skeuomorphic design went away. In retrospect, it seems like a practice that eased anxieties as human society digitized at a dizzying rate. Today, those of us who read ebooks are happy to do so on e-ink devices that don’t even have page counts. Pretending these books are printed on paper is just unwanted ornamentation.

That’s how I think of AI-assisted coding today. The transition to coding with AIs is arguably the largest change in the software engineering craft in decades. It’s understandable that many want to stay in control in an IDE, but I think that’s holding them back.

But what about quality?

Quality is a key reason that so many want to keep a close eye on the AI, and that’s understandable. These are uncanny tools that pepper their work with comically inscrutable mistakes, and nobody wants that stuff to get to production.

To which I would only say: Software quality has always been a multifaceted challenge. And software organizations have long been required to evolve their quality processes as they have increased headcount, switched languages and frameworks, changed architectures, etc.

AI coding represents a massive opportunity to improve software development’s cost, quality, and speed. But to get over the hump, software engineers will need to interrogate how they ensure quality in their work, individually and within organizations. And then they’ll need to make as many of those practices as possible legible to the AI.

What does that take? In the next few years, we’re all going to find out.